Key Takeaways

- AI search doesn’t rank pages; it recommends entities. If your firm doesn’t exist as a verified entity in the Knowledge Graph, you’re invisible regardless of your website’s SEO.

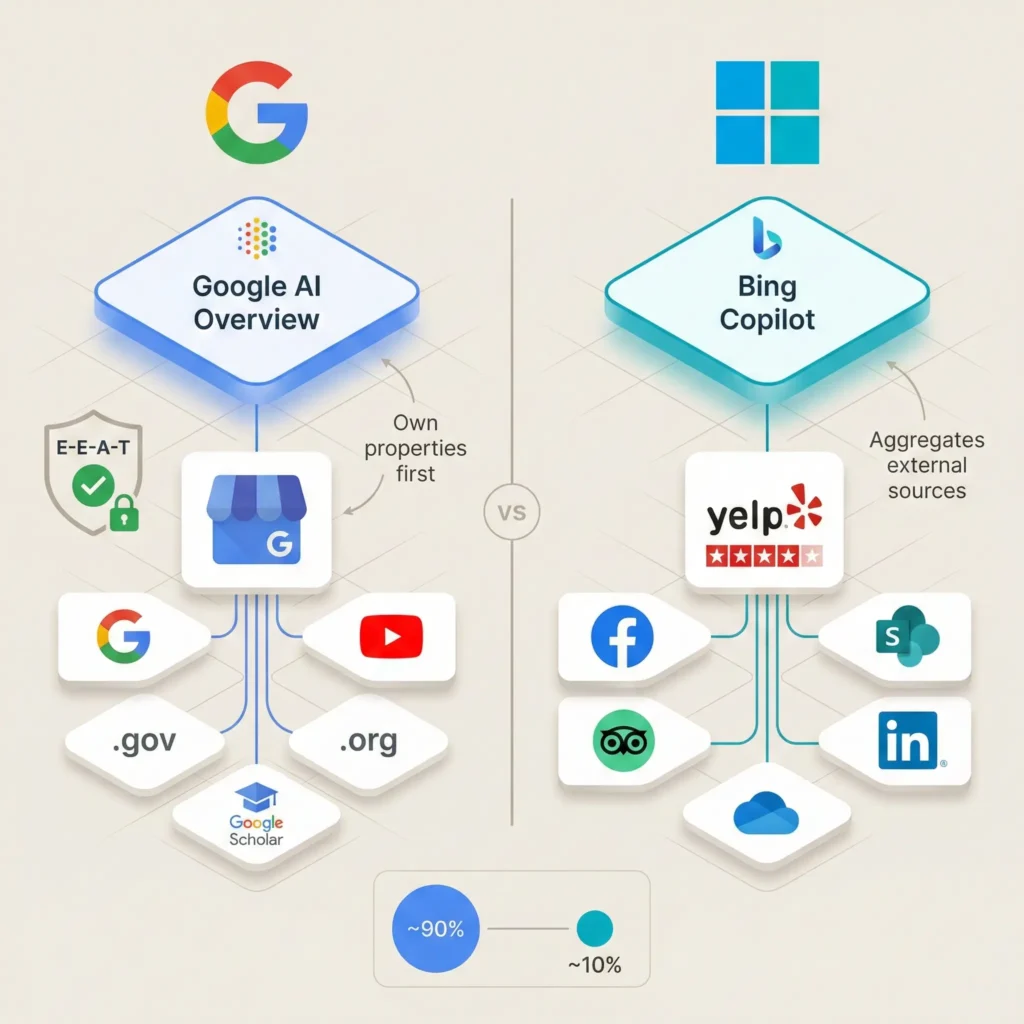

- Google and Bing use different “truth sets.” Google trusts Google Business Profile reviews. Bing pulls from Yelp and Facebook. Ignoring Yelp costs you ~10% of the search market.

- The tracking problem is real. Google doesn’t separate AI Overview traffic from organic; per SparkToro, 65% of searches end without a click, CTR drops 61% on informational queries. Use ZipTie, Semrush AI Visibility, or Ahrefs Brand Radar to track “Share of Voice.”

- The “sameAs” schema property is the most important code on your site. It merges confidence scores from State Bar, Avvo, Justia, and LinkedIn into your entity.

- Reviews are read, not counted. AI extracts specific attributes from review text; “kept me informed about my custody case” is worth more than “great lawyer!”


I spent 10 years inside a law firm (and managed budgets at Swatch Group), so I treat data accuracy like a financial audit. I spent way too long thinking SEO was about keywords, backlinks, page speed, and all the technical stuff that used to matter. And it did matter, for the old system where Google showed you ten blue links and you picked one.

That system is dying.

Google AI Overviews and Bing Copilot don’t just list links anymore. They synthesize an answer from multiple sources and present it directly on the results page. Often the user never clicks through: they get the phone number from the AI summary and call. According to SparkToro data, 65% of searches now end without a click. For informational queries where AI Overviews appear, CTR drops by up to 61%.

Your website traffic stays flat, but your phone rings more and you have no idea why. That’s the tracking problem nobody has solved.

This is the shift from “retrieval” to “synthesis.” And it changes everything about how law firm marketing actually works.

Why Your Website Doesn’t Matter (As Much As You Think)

Summary: AI search recommends entities, not websites. An “entity” is a verified thing in Google’s Knowledge Graph that exists across multiple trusted data sources. If your firm has conflicting data (different addresses on website vs. Google Business Profile vs. Data Axle), your confidence score drops and the AI excludes you to avoid hallucinating incorrect information.

The AI doesn’t rank your website. It recommends entities.

An “entity” is a verified thing in Google’s Knowledge Graph: a person, place, or organization that exists across multiple trusted data sources. When someone asks “best car accident lawyer in Miami,” the AI doesn’t scan websites for keywords. It queries the Knowledge Graph to find entities tagged as “Attorney” + “Personal Injury” + “Miami” and cross-references their confidence scores.

If your firm doesn’t exist as a clearly defined entity in that graph, you’re invisible. Doesn’t matter how good your website is.

The Confidence Score Problem

Google builds entity confidence by looking for consistency across trusted databases. If your website says “123 Main Street” but your Google Business Profile says “123 Main St.” and Data Axle says “125 Main Street,” the AI drops your confidence score.

Conflicting data = uncertainty. Uncertainty = exclusion from AI recommendations.

The AI would rather show nothing than risk hallucinating wrong information about a law firm.
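The consistency check above is easy to automate. Below is a minimal Python sketch. It assumes you have collected each listing’s address into a dictionary, by hand or from exports. The source names, the abbreviation map, and the `audit_listings` helper are all hypothetical.

The sketch separates formatting mismatches (“Street” vs. “St.”) from substantive conflicts (a wrong street number). Both are worth fixing if the goal is identical data everywhere, but conflicts are the urgent ones.

```python
import re

# Hypothetical abbreviation map; extend it for the addresses you actually use.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "suite": "ste", "boulevard": "blvd"}


def normalize_address(address: str) -> str:
    """Lowercase, drop punctuation, and collapse common abbreviations."""
    tokens = re.findall(r"[a-z0-9]+", address.lower())
    return " ".join(ABBREVIATIONS.get(token, token) for token in tokens)


def audit_listings(canonical: str, listings: dict[str, str]) -> dict[str, str]:
    """Classify each listing as an exact match, a formatting mismatch, or a conflict."""
    canonical_norm = normalize_address(canonical)
    report = {}
    for source, address in listings.items():
        if address == canonical:
            report[source] = "exact match"
        elif normalize_address(address) == canonical_norm:
            report[source] = "formatting mismatch"
        else:
            report[source] = "conflict"
    return report


if __name__ == "__main__":
    listings = {
        "website": "123 Main Street",
        "google_business_profile": "123 Main St.",
        "data_axle": "125 Main Street",
    }
    for source, status in audit_listings("123 Main Street", listings).items():
        print(f"{source}: {status}")
```

With the article’s example addresses, the website is an exact match. Google Business Profile is a formatting mismatch, and Data Axle is a conflict.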

The “Truth Sets” That Feed the AI

Summary: AI models prioritize three tiers of data: (1) Identity Data, from State Bar records, the Big Four directories (Avvo, Justia, Martindale, FindLaw), and data aggregators (Data Axle, Neustar, Foursquare); (2) Reputation Data, from Google Business Profile reviews for Google and Yelp and Facebook for Bing; (3) Expertise Data, from case law databases and niche directories (AILA, AAML, USPTO-related).

AI models don’t crawl the entire web equally. They prioritize specific high-trust repositories. I think of these as “truth sets”: the databases the AI actually believes.

Tier 1: Identity Data (Proves You Exist)

State Bar websites are the source of truth for licensure. The AI verifies that a “Person” entity is actually an “Attorney” by checking bar records: full legal name, bar number, admission date, and active status.

If your website says “Jon Doe” but the Bar says “Jonathan Q. Doe,” the entity link is weak.

The Big Four legal directories matter: Avvo (consumer law, Q&A corpus likely used as training data), Justia (links profiles to actual case law), Martindale-Hubbell (peer review ratings = E-E-A-T signal), FindLaw (massive repository ranking for millions of keywords).

Data aggregators feed location data to search engines: Data Axle, Neustar/Localeze, Foursquare. If you move offices and update Google but not Data Axle, Google may revert your address or flag you as suspicious.

Tier 2: Reputation Data (Proves You’re Trustworthy)

Google Business Profile reviews are the gold standard for Google AI Overviews. The AI extracts keywords (“responsive,” “won my case”) to generate descriptions.

Bing pulls from Yelp and Facebook. A firm with 5.0 stars on Google but 3.0 on Yelp looks inferior on Bing Copilot, and Bing accounts for roughly 10% of the search market.

Tier 3: Expertise Data (Proves You Know What You’re Doing)

Google Scholar indexes attorneys as counsel of record. If your name appears on appellate decisions, the AI links your entity to specific legal issues.

Niche directories signal specialization: AILA for immigration, AAML for family law, USPTO-related databases for IP.


Google vs. Bing: They Trust Different Sources

Summary: Google is Knowledge Graph-centric. It prioritizes its own properties (GBP, YouTube), applies strict E-E-A-T filters for YMYL queries, and prefers .gov and .org domains. Bing relies on third-party partnerships (Yelp, Facebook, TripAdvisor) and integrates with Microsoft 365, creating a “private search” visibility tier based on internal documents and emails.

Google AI Overviews

Google prioritizes its own properties: Google Business Profile for local data, YouTube for informational queries. Legal topics are classified as YMYL (“Your Money or Your Life”). For them, Google applies strict E-E-A-T filters and prefers .gov and .org domains.

Google’s AI is tuned to be conservative. It would rather exclude you than risk being wrong.

Bing Copilot

Bing relies more on third-party partnerships. It aggregates reviews from Yelp, Facebook, and TripAdvisor.

Bing also integrates with Microsoft 365. For corporate clients, Microsoft 365 Copilot can search internal emails and SharePoint alongside the public web. If your firm’s documents are already in a potential client’s internal system, Copilot can surface you based on that relationship, not just public SEO.

The Practical Implication

You need presence on both ecosystems. A Google-only strategy ignores ~10% of search. A strategy that ignores Yelp gets crushed on Bing Copilot.

The Schema Code That Actually Matters

Summary: Use LegalService as the parent type for the firm and Person (with jobTitle “Attorney”) for individual attorney pages. Schema.org’s Attorney type is a subtype of LegalService, not Person. Three critical properties: (1) sameAs, which links to State Bar, Avvo, Justia, and LinkedIn profiles to merge confidence scores; (2) knowsAbout, which explicitly lists practice areas for topic modeling; (3) alumniOf, which reinforces education credentials. FAQPage schema dramatically increases the probability of text being lifted into AI Overviews.

Schema markup is JSON-LD code that tells the AI what your content means. Most law firm websites either have no schema or use generic LocalBusiness markup. That is too broad: it doesn’t tell the AI that you provide legal services.

The Correct Structure

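Use LegalService as the parent type for the firm. For individual practitioner pages, use Person (with a jobTitle of “Attorney”), nested within the LegalService. Despite its name, schema.org’s Attorney type is not a subtype of Person. It sits under LegalService as a business type, so Person-only properties such as alumniOf don’t apply to it.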

The Three Properties That Matter

sameAs: the most important property for entity SEO. It’s a list of URLs that refer to the same entity, such as the State Bar profile, Avvo, Justia, and LinkedIn. This tells the AI: “The entity on this page is the exact same entity verified at these trusted locations.” It merges confidence scores.

knowsAbout: explicitly lists concepts the attorney is expert in (“Personal Injury,” “Product Liability”). Maps directly to the AI’s topic modeling.

alumniOf: links to the law school. Reinforces education credentials for E-E-A-T.
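Here is a minimal Python sketch that emits the firm-plus-attorney JSON-LD described above. It nests each attorney as a schema.org Person (jobTitle “Attorney”) under the firm’s LegalService via the `employee` property, because `alumniOf` is defined for Person in schema.org. Every name, URL, and the `build_firm_schema` helper are placeholders. Swap in your real bar, Avvo, Justia, and LinkedIn profile URLs.

```python
import json


def build_firm_schema(firm: dict, attorneys: list[dict]) -> str:
    """Return JSON-LD for a LegalService with its attorneys nested as Person entities."""
    data = {
        "@context": "https://schema.org",
        "@type": "LegalService",
        "name": firm["name"],
        "url": firm["url"],
        "telephone": firm["telephone"],
        "address": {
            "@type": "PostalAddress",
            "streetAddress": firm["street"],
            "addressLocality": firm["city"],
            "addressRegion": firm["region"],
            "postalCode": firm["postal_code"],
        },
        "sameAs": firm["same_as"],
        "employee": [
            {
                "@type": "Person",
                "name": a["name"],  # should match the bar record character-for-character
                "jobTitle": "Attorney",
                "sameAs": a["same_as"],  # bar profile, Avvo, Justia, LinkedIn
                "knowsAbout": a["knows_about"],  # practice areas
                "alumniOf": {"@type": "CollegeOrUniversity", "name": a["law_school"]},
            }
            for a in attorneys
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    firm = {  # all values are placeholders
        "name": "Smith Law Firm",
        "url": "https://www.example.com/",
        "telephone": "+1-305-555-0100",
        "street": "123 Main Street",
        "city": "Miami",
        "region": "FL",
        "postal_code": "33101",
        "same_as": ["https://www.example.com/placeholder-firm-directory-profile"],
    }
    attorneys = [{
        "name": "Jonathan Q. Doe",
        "same_as": [
            "https://www.example.com/placeholder-bar-profile",
            "https://www.example.com/placeholder-avvo-profile",
        ],
        "knows_about": ["Personal Injury", "Product Liability"],
        "law_school": "Example University School of Law",
    }]
    print('<script type="application/ld+json">')
    print(build_firm_schema(firm, attorneys))
    print("</script>")
```

The printed `<script type="application/ld+json">` wrapper is the standard way to embed JSON-LD in a page.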

FAQPage Schema for AI Extraction

AI Overviews are question-answering engines. Every practice area page should have an FAQ section wrapped in FAQPage schema. This dramatically increases the probability of your text being “lifted” into the AI response.
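A minimal Python sketch of FAQPage markup for a practice area page follows. The `build_faq_schema` helper is hypothetical, and the sample question reuses the Florida statute-of-limitations example from elsewhere in this article. Google’s structured data guidelines expect the marked-up questions and answers to also appear as visible text on the page. Generate the markup from the same content you display.

```python
import json


def build_faq_schema(pairs: list[tuple[str, str]]) -> str:
    """Return FAQPage JSON-LD for a list of (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    faqs = [(
        "What is the statute of limitations for medical malpractice in Florida?",
        "Two years from the date the incident was discovered, or should have been "
        "discovered, with an absolute four-year cap from the date of the incident.",
    )]
    print(build_faq_schema(faqs))
```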

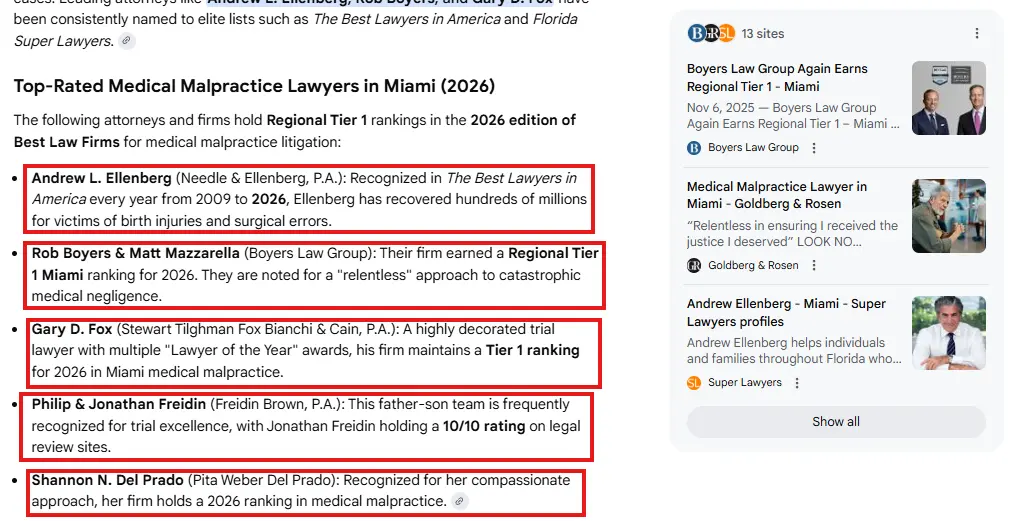

How to Write Content the AI Will Actually Use

Summary: Use an “answer-first” methodology: start every section with a 40-60 word definitional block sized for AI extraction. Structure case results as Problem-Action-Result (P-A-R) with specific legal challenges, strategies, and geo-tagged outcomes. Build topic clusters with pillar pages linked to 5-10 spoke pages answering specific questions.

The old SEO playbook was 2,000-word guides stuffed with keywords. The new playbook is “answer-first”: concise, structured, and citable.

The 40-60 Word Definition

Start every major section with a direct answer that’s perfectly sized for the AI to scrape:

“In Florida, the statute of limitations for medical malpractice is two years from the date the incident was discovered, or should have been discovered, with an absolute four-year cap from the date of the incident. Exceptions apply for fraud, concealment, and cases involving minors.”

That block is exactly what the AI wants to display in the overview.
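A simple word count in your publishing workflow keeps definitional blocks inside the 40-60 word range. This Python sketch counts whitespace-separated tokens, so “four-year” counts as one word. The `is_extraction_sized` helper is hypothetical, and its default bounds simply encode the range suggested above.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated tokens; hyphenated words count once."""
    return len(text.split())


def is_extraction_sized(text: str, low: int = 40, high: int = 60) -> bool:
    """True if the block falls inside the suggested answer-first range."""
    return low <= word_count(text) <= high


definition = (
    "In Florida, the statute of limitations for medical malpractice is two years "
    "from the date the incident was discovered, or should have been discovered, "
    "with an absolute four-year cap from the date of the incident. Exceptions "
    "apply for fraud, concealment, and cases involving minors."
)

if __name__ == "__main__":
    print(word_count(definition), is_extraction_sized(definition))  # 44 True
```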

The P-A-R Framework for Case Results

Most case results pages are useless: “$1 Million Settlement, Car Accident.” The AI can’t learn expertise from that.

Structure results as Problem-Action-Result:

- Problem: “Liability was disputed because the client was crossing outside the crosswalk”

- Action: “We retained a forensic accident reconstruction expert who proved the driver was traveling 15 mph over the limit”

- Result: “Full policy limit settlement of $100,000 in Miami-Dade County”

Now the AI has specific hooks to associate your firm with niche queries.
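If case results live in a CMS or a spreadsheet, storing them as structured records makes it harder to publish a bare “$1 Million Settlement.” This Python sketch shows one way to do that. The `CaseResult` class and its field names are hypothetical, and it refuses to create a result with any part missing.

```python
from dataclasses import dataclass, fields


@dataclass
class CaseResult:
    problem: str  # the specific legal challenge
    action: str  # the strategy the firm used
    result: str  # the outcome, without the location
    venue: str  # geo-tag, e.g. the county

    def __post_init__(self) -> None:
        # Reject records where any P-A-R field is blank.
        for f in fields(self):
            if not getattr(self, f.name).strip():
                raise ValueError(f"P-A-R field '{f.name}' is empty")

    def render(self) -> str:
        return (
            f"Problem: {self.problem}\n"
            f"Action: {self.action}\n"
            f"Result: {self.result} in {self.venue}"
        )


if __name__ == "__main__":
    case = CaseResult(
        problem="Liability was disputed because the client was crossing outside the crosswalk",
        action=("We retained a forensic accident reconstruction expert who proved "
                "the driver was traveling 15 mph over the limit"),
        result="Full policy limit settlement of $100,000",
        venue="Miami-Dade County",
    )
    print(case.render())
```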

Topic Clusters, Not Giant Pages

Instead of one massive “Divorce” page, create a pillar page linked to 5-10 spoke pages: “Alimony calculation in Florida,” “Divorce timeline FL,” “Child custody for unmarried fathers FL.”

This interlinked structure tells the Knowledge Graph you cover the entirety of the topic.
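Once you have a crawl of your internal links, it’s easy to check whether a cluster is actually interlinked. This Python sketch assumes a hub-and-spoke rule: the pillar links to every spoke, and every spoke links back to the pillar. It takes a hypothetical `links` map from each page’s URL to the set of URLs it links to. The slugs are placeholders.

```python
def missing_cluster_links(
    pillar: str, spokes: list[str], links: dict[str, set[str]]
) -> list[tuple[str, str]]:
    """Return (from_page, to_page) links the hub-and-spoke structure needs but lacks."""
    missing = []
    for spoke in spokes:
        if spoke not in links.get(pillar, set()):
            missing.append((pillar, spoke))
        if pillar not in links.get(spoke, set()):
            missing.append((spoke, pillar))
    return missing


if __name__ == "__main__":
    pillar = "/divorce/"
    spokes = [
        "/divorce/alimony-calculation-florida/",
        "/divorce/timeline-florida/",
        "/divorce/custody-unmarried-fathers-florida/",
    ]
    links = {
        "/divorce/": {spokes[0], spokes[1]},
        spokes[0]: {"/divorce/"},
        spokes[1]: set(),
        spokes[2]: {"/divorce/"},
    }
    for source, target in missing_cluster_links(pillar, spokes, links):
        print(f"add a link from {source} to {target}")
```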


Reviews Are Read Now, Not Just Counted

Summary: AI performs Aspect-Based Sentiment Analysis, extracting tuples like {Entity: Law Firm X, Attribute: Responsiveness, Sentiment: Positive}. “They kept me informed about my custody case every week” provides more data than “Great lawyer!” Review velocity (the rate of new reviews) signals an active practice. Owner responses are also indexed, so use them to reinforce expertise.

AI models don’t just count stars. They perform “Aspect-Based Sentiment Analysis”: they read the actual text and extract specific attributes.

The Tuple Concept

The AI extracts data tuples like {Entity: Law Firm X, Attribute: Responsiveness, Sentiment: Positive}.

A review that says “Great lawyer!” provides almost no data. A review that says “They kept me informed about my custody case every week and explained the process clearly” provides multiple data points linking the firm to case types and service attributes.
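Here is a deliberately crude Python sketch of the tuple idea. It is not how Google or Bing perform aspect-based sentiment analysis. They would use trained language models, and their internals aren’t public. The sketch just matches a hypothetical hand-written phrase lexicon to show why a detailed review yields more tuples than “Great lawyer!”

```python
# Hypothetical lexicon: phrase -> (attribute, sentiment). Real systems learn this.
ASPECT_LEXICON = {
    "kept me informed": ("Responsiveness", "Positive"),
    "never returned": ("Responsiveness", "Negative"),
    "explained the process clearly": ("Communication", "Positive"),
    "confusing": ("Communication", "Negative"),
}
CASE_TYPES = ["custody", "divorce", "probate", "personal injury"]


def extract_tuples(entity: str, review: str) -> tuple[list[dict], list[str]]:
    """Return (aspect tuples, case types mentioned) for one review."""
    text = review.lower()
    tuples = [
        {"Entity": entity, "Attribute": attribute, "Sentiment": sentiment}
        for phrase, (attribute, sentiment) in ASPECT_LEXICON.items()
        if phrase in text
    ]
    case_types = [case_type for case_type in CASE_TYPES if case_type in text]
    return tuples, case_types


if __name__ == "__main__":
    for review in (
        "Great lawyer!",
        "They kept me informed about my custody case every week "
        "and explained the process clearly",
    ):
        print(extract_tuples("Law Firm X", review))
```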

Review Velocity Matters

100 reviews from 2022 are worth less than 50 reviews from 2025. “Review velocity,” the rate of new reviews, signals an active, thriving practice. Sudden spikes trigger spam filters, while a steady drip signals organic growth.
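Review velocity can be tracked from nothing more than review dates. This Python sketch counts reviews per month over a trailing window and flags months far above the window’s average. The six-month window and the `spike_factor` of 3 are hypothetical choices. The thresholds search engines’ spam filters actually use are not public.

```python
from datetime import date
from statistics import mean


def month_index(d: date) -> int:
    """Months since year 0, so consecutive months differ by 1."""
    return d.year * 12 + (d.month - 1)


def trailing_counts(review_dates: list[date], as_of: date, months: int = 6) -> list[int]:
    """Reviews per month for the `months` months ending with as_of's month, oldest first."""
    end = month_index(as_of)
    counts = [0] * months
    for d in review_dates:
        offset = end - month_index(d)
        if 0 <= offset < months:
            counts[months - 1 - offset] += 1
    return counts


def velocity_report(review_dates: list[date], as_of: date, months: int = 6,
                    spike_factor: float = 3.0) -> dict:
    """Average monthly velocity plus months whose count exceeds spike_factor x the average."""
    counts = trailing_counts(review_dates, as_of, months)
    average = mean(counts)
    spikes = [i for i, c in enumerate(counts) if average > 0 and c > spike_factor * average]
    return {"counts": counts, "per_month": average, "spike_month_indexes": spikes}


if __name__ == "__main__":
    # Hypothetical history: one review a month, then 20 in a single month.
    dates = [date(2025, m, 15) for m in range(1, 6)] + [date(2025, 6, 1)] * 20
    print(velocity_report(dates, as_of=date(2025, 6, 30)))
```

Reviews older than the window contribute nothing, which mirrors the point that 2022 reviews count for less than recent ones.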

Your Response Gets Indexed Too

When you reply to a review, that text is also indexed. Use responses to reinforce expertise: “We were glad to help you resolve your complex probate matter involving out-of-state assets…”


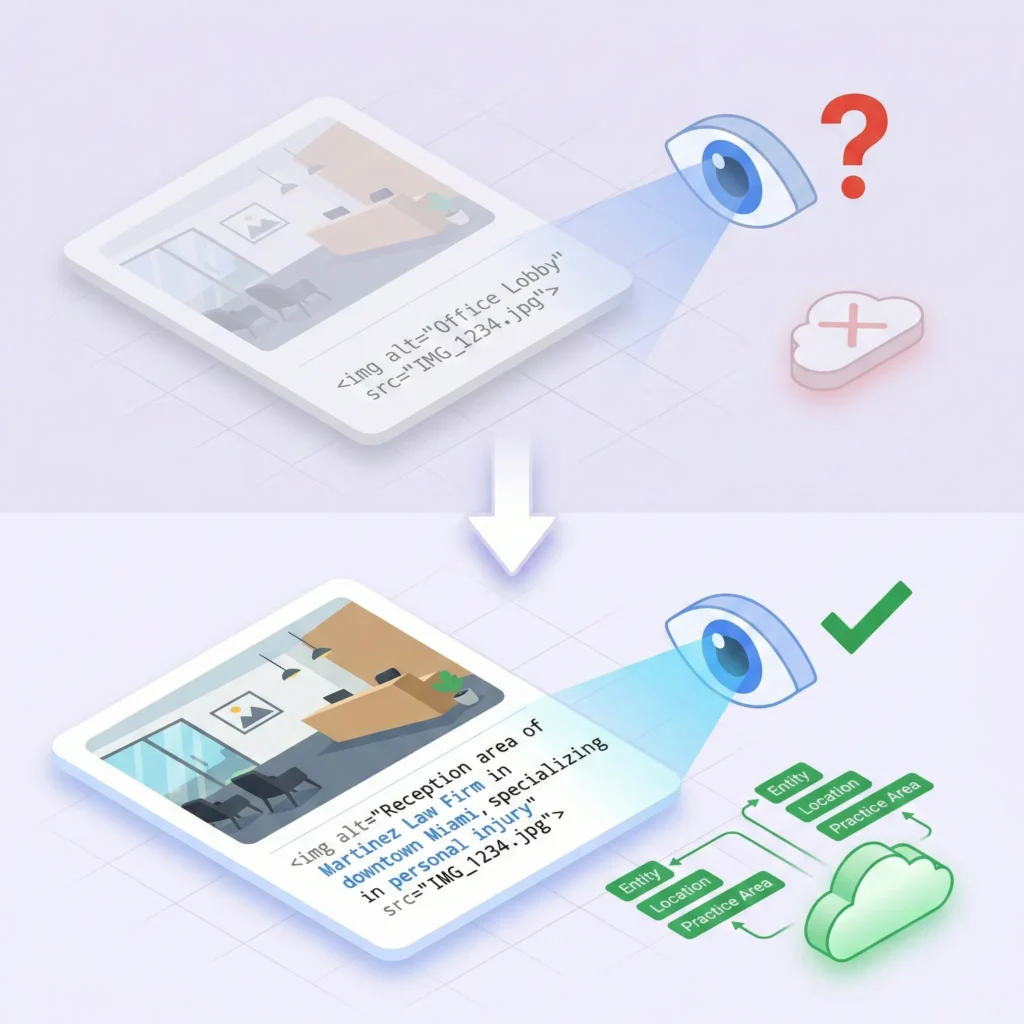

The Images and Videos the AI Actually Sees

Summary: Google Vision AI detects stock photography; stock images are devalued as “low information.” Real photos verify physical existence. Alt text should function as AI prompts (“Reception area of Smith Law Firm in downtown Miami, specializing in personal injury”). YouTube videos surface in AI Overviews; use chapters to enable deep-linking to specific timestamps.

Google’s Vision AI analyzes images. It can detect stock photography, and stock images are devalued as “low information.”

Real photos of the attorney, office exterior, and team signal authenticity and help the AI verify the physical existence of the entity.

Alt Text as AI Prompts

Treat alt text not as accessibility boilerplate but as a prompt for the AI. Instead of “Office Lobby,” use “Reception area of Smith Law Firm in downtown Miami, specializing in personal injury.”
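An alt-text audit can run over any page’s HTML with Python’s standard-library `html.parser`. This sketch flags images whose alt text is missing, generic, or very short. The list of generic phrases and the five-word minimum are hypothetical thresholds.

```python
from html.parser import HTMLParser
from typing import Optional

# Hypothetical list of alt text that says nothing about the firm.
GENERIC_ALT = {"", "image", "photo", "picture", "logo", "office lobby"}


class AltTextAudit(HTMLParser):
    """Collect <img> tags whose alt text is missing, generic, or very short."""

    def __init__(self, min_words: int = 5) -> None:
        super().__init__()
        self.min_words = min_words
        self.flagged: list[tuple[str, str]] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, Optional[str]]]) -> None:
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = (attributes.get("alt") or "").strip()
        if alt.lower() in GENERIC_ALT or len(alt.split()) < self.min_words:
            self.flagged.append((attributes.get("src") or "", alt))


if __name__ == "__main__":
    page = (
        '<img src="lobby.jpg" alt="Office Lobby">'
        '<img src="reception.jpg" alt="Reception area of Smith Law Firm in downtown '
        'Miami, specializing in personal injury">'
        '<img src="team.jpg">'
    )
    audit = AltTextAudit()
    audit.feed(page)
    print(audit.flagged)
```

Self-closing tags such as `<img ... />` are also handled, because `HTMLParser` routes them through `handle_starttag` by default.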

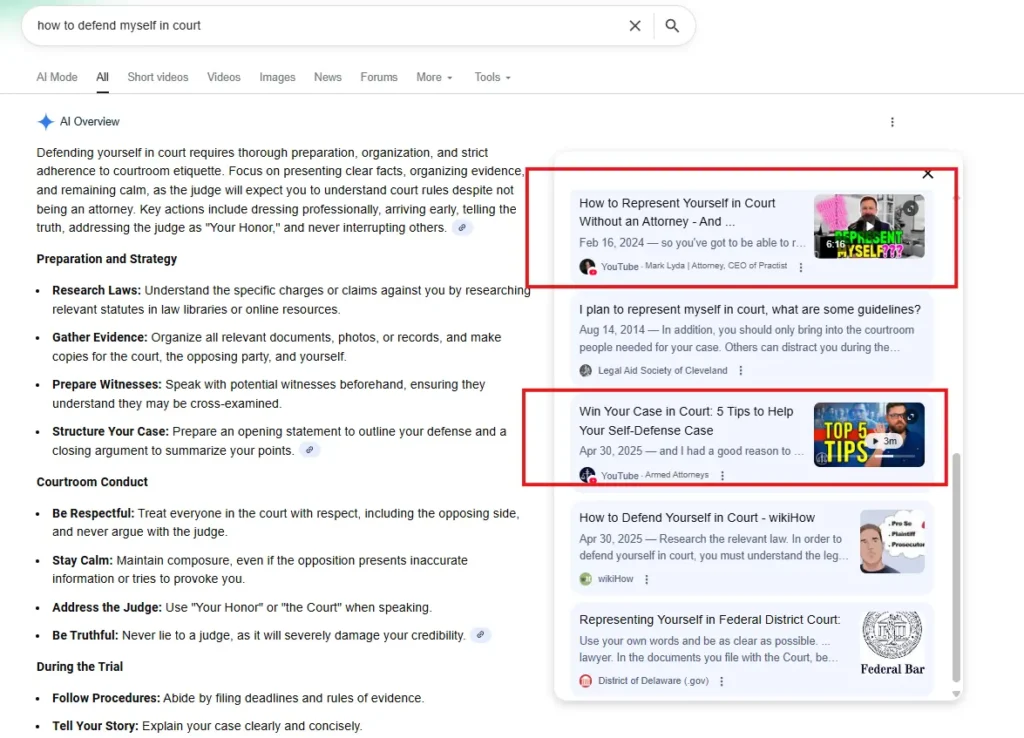

YouTube for Procedural Queries

YouTube is a massive data source for Google’s AI. “How-to” videos frequently surface in AI Overviews.

Use YouTube chapters to mark specific segments (for example, “0:45 What is the statute of limitations?” in the video description). The AI can deep-link directly to that timestamp.
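Chapters are defined by timestamps in the video description. YouTube’s Help Center sets three rules:

- the first timestamp must be 0:00;
- there must be at least three timestamps, in ascending order;
- each chapter must be at least 10 seconds long.

This Python sketch checks those rules before formatting the description lines. The `build_chapters` helper is hypothetical. It can’t check the last chapter’s length without the video’s duration.

```python
def format_timestamp(seconds: int) -> str:
    """Format seconds as M:SS, or H:MM:SS for videos over an hour."""
    minutes, secs = divmod(seconds, 60)
    hours, minutes = divmod(minutes, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


def build_chapters(chapters: list[tuple[int, str]]) -> str:
    """Return description lines for YouTube chapters after checking the basic rules."""
    if len(chapters) < 3:
        raise ValueError("YouTube requires at least three chapters")
    if chapters[0][0] != 0:
        raise ValueError("The first chapter must start at 0:00")
    for (prev, _), (cur, _) in zip(chapters, chapters[1:]):
        if cur - prev < 10:
            raise ValueError("Chapters must be ascending and at least 10 seconds long")
    return "\n".join(f"{format_timestamp(s)} {title}" for s, title in chapters)


if __name__ == "__main__":
    print(build_chapters([
        (0, "Intro"),
        (45, "What is the statute of limitations?"),
        (150, "Exceptions for minors"),
    ]))
```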


The Tracking Problem Nobody Has Solved

Summary: Google Search Console and GA4 commingle AI Overview traffic with traditional organic traffic; there’s no filter to separate them. AI Overviews trigger on ~15-20% of all queries (88% informational), causing CTR drops up to 61%. Third-party tools (ZipTie, Semrush AI Visibility, Ahrefs Brand Radar) use SERP parsing to track “Share of Voice” in AI answers. For GSC, use regex patterns to isolate question-based queries as a proxy for AI Overview activity.

This is the part that drives everyone crazy: Google doesn’t tell you which traffic came from AI Overviews.

Google Search Console and GA4 commingle data from traditional “blue link” results with data from AI Overviews. There’s no dedicated filter, no API endpoint, no way to isolate traffic specifically from AI citations. A site owner sees 10,000 visits from “Google Organic,” but 3,000 might have come from AI Overview citations and 7,000 from traditional links. You can’t tell which is which.

The Numbers Are Brutal

AI Overviews trigger on approximately 15-20% of all queries. But they’re not evenly distributed: 88% of queries that trigger AI Overviews are informational. For industries like healthcare, legal, and “how-to” content, exposure to AI Overviews is near-total.

The CTR collapse is real: organic click-through rates drop up to 61% for informational queries where AI Overviews are present. Users read the synthesized answer and leave without clicking anything.

The “Great Decoupling”

Historically, impressions correlated with clicks. If visibility went up, traffic went up. That correlation has fractured. Impressions now measure “Brand Exposure” rather than “Traffic Potential.”

A query like “how to file for divorce in Florida” might show massive impression volume but <1% CTR, because users get the answer from the AI and never click through. An uninformed stakeholder sees collapsing CTR and assumes the content is failing. In fact, it may be doing so well that the AI chose it to answer the question directly.

The Third-Party Solutions

Since Google won’t provide the data, tools have emerged to track AI visibility through SERP parsing:

ZipTie.dev: captures the actual HTML of AI Overviews and performs “entity matching” to identify whether your brand is mentioned, even without a link. Tracks mentions (text), not just citations (links). Provides screenshots as visual proof, since AI results are personalized and transient.

Semrush AI Visibility Toolkit: tracks visibility across Google AI Overviews, ChatGPT, and Perplexity. Provides sentiment analysis (how the AI talks about your brand) and lets you track specific high-value prompts daily.

Ahrefs Brand Radar: aggregates citation flows to show which specific pages on your site are fueling AI answers. Strong for competitor gap analysis, meaning queries where competitors are cited but you’re not.
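Each tool defines “Share of Voice” in its own way. One common definition is your brand’s share of all brand mentions across a fixed set of tracked prompts. This Python sketch computes that from results you would record or export yourself. The prompts, brand names, and the `share_of_voice` helper are hypothetical.

```python
from collections import Counter


def share_of_voice(answers: dict[str, set[str]]) -> dict[str, float]:
    """Each brand's fraction of all brand mentions across the tracked prompts."""
    mentions = Counter(brand for brands in answers.values() for brand in brands)
    total = sum(mentions.values())
    return {brand: count / total for brand, count in mentions.items()} if total else {}


if __name__ == "__main__":
    # Brands mentioned in each AI answer (hypothetical tracking data).
    answers = {
        "best car accident lawyer miami": {"Smith Law", "Doe & Partners"},
        "miami personal injury attorney free consultation": {"Doe & Partners"},
        "who handles pedestrian accidents in miami": {"Smith Law", "Roe Injury Group"},
    }
    for brand, share in sorted(share_of_voice(answers).items()):
        print(f"{brand}: {share:.0%}")
```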

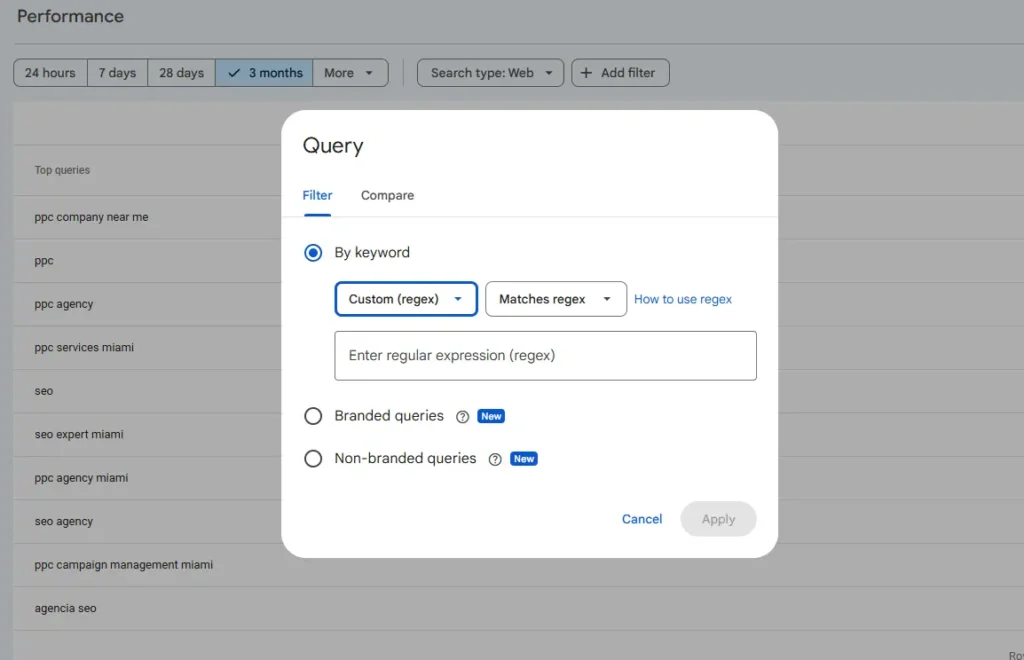

The GSC Regex Workaround

Since GSC doesn’t have an AI Overview filter, use regular expressions to isolate queries that statistically correlate with high AI Overview triggering:

Pattern for question-based queries:

^(who|what|where|when|why|how|which|can|does|do|is|are|should|would|will|did|was|were)\b

This filters for queries that start with a question word. The trailing \b (word boundary) keeps “is” from matching “island” and “do” from matching “dog.” These are your “informational candidates,” where AI Overviews are most likely.

Pattern for long-tail queries (7+ words):

^(\S+\s+){6,}\S+$

Six or more word-plus-space groups followed by a final word means at least seven words. Complex queries trigger AI Overviews more frequently.
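Before pasting patterns into GSC, you can test them against sample queries. This Python sketch adds a trailing `\b` to the question pattern. It writes the long-tail pattern as six or more word-plus-space groups followed by a final word, so it matches seven or more words. Both patterns use only syntax that RE2, the regex flavor Search Console uses, also supports. The sample queries are hypothetical.

```python
import re

# Trailing \b stops "is" from matching "island" and "do" from matching "dog".
QUESTION = re.compile(
    r"^(who|what|where|when|why|how|which|can|does|do|is|are|should|would|will|did|was|were)\b",
    re.IGNORECASE,
)
# Six or more "word + whitespace" groups, then a final word: seven or more words.
LONG_TAIL = re.compile(r"^(\S+\s+){6,}\S+$")


def classify(query: str) -> dict[str, bool]:
    """Report which GSC filter patterns a query would match."""
    return {
        "question": QUESTION.search(query) is not None,
        "long_tail": LONG_TAIL.search(query) is not None,
    }


if __name__ == "__main__":
    for q in ("how to file for divorce in florida", "island divorce lawyer", "dog bite lawyer"):
        print(q, classify(q))
```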

The “Phantom Traffic” Diagnostic:

Filter for question patterns, sort by impressions (high to low), and compare CTR to historical averages. Queries with massive impressions but <1% CTR (where you rank in the top 5) are primary candidates for “AI Cannibalization.”
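The diagnostic can be run on a Search Console performance export. This Python sketch makes two assumptions:

- each row has been loaded into a dict with `query`, `clicks`, `impressions`, and `position` keys (adapt these names to your export’s columns);
- you’ve kept a hypothetical `historical_ctr` map from an earlier period.

The 1% CTR and top-5 thresholds come from the paragraph above. The sample rows are made up.

```python
import re

QUESTION = re.compile(
    r"^(who|what|where|when|why|how|which|can|does|do|is|are|should|would|will|did|was|were)\b",
    re.IGNORECASE,
)


def phantom_candidates(rows: list[dict], historical_ctr: dict[str, float],
                       max_ctr: float = 0.01, max_position: float = 5.0) -> list[dict]:
    """Question queries ranking in the top 5 with CTR under 1%, highest impressions first."""
    candidates = []
    for row in rows:
        if row["impressions"] == 0 or not QUESTION.search(row["query"]):
            continue
        ctr = row["clicks"] / row["impressions"]
        if row["position"] <= max_position and ctr < max_ctr:
            candidates.append({
                "query": row["query"],
                "impressions": row["impressions"],
                "ctr": ctr,
                "historical_ctr": historical_ctr.get(row["query"]),  # None if no history
            })
    return sorted(candidates, key=lambda r: r["impressions"], reverse=True)


if __name__ == "__main__":
    rows = [  # hypothetical export rows
        {"query": "what is alimony in florida", "clicks": 30, "impressions": 5000, "position": 3.4},
        {"query": "how to file for divorce in florida", "clicks": 40, "impressions": 12000, "position": 2.1},
        {"query": "miami divorce lawyer", "clicks": 200, "impressions": 4000, "position": 4.0},
        {"query": "can i move out during divorce", "clicks": 300, "impressions": 6000, "position": 2.0},
    ]
    history = {"how to file for divorce in florida": 0.045}
    for candidate in phantom_candidates(rows, history):
        print(candidate)
```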

The Lift Methodology

Since direct attribution is impossible, measure correlation:

- Monitor impression trends for your “question query” segment in GSC

- Overlay with Direct Traffic in GA4

- If AI Visibility (impressions) increases and Direct Traffic increases simultaneously, users are likely reading AI answers and then visiting your site directly or searching your brand name later

For local businesses, correlate impression spikes on “near me” queries with call tracking data (e.g., from CallRail). This closes the attribution loop, showing that the AI Overview likely drove business value even without website sessions.


The Bottom Line

Summary: Entity health requires four pillars: (1) data consistency, with NAP identical everywhere and your name matching bar records character-for-character; (2) review sentiment, from specific, keyword-rich reviews mentioning case types; (3) schema coverage, with LegalService and Attorney markup whose sameAs links point to verified profiles; (4) topical authority, from dense clusters of interlinked content. The metric shifts from “traffic” to “Share of Influence”: being cited matters more than being clicked.

The firms that win in AI search understand this isn’t about websites anymore. It’s about entities.

Your digital existence is a data layer that feeds the AI. The consistency of that data determines whether the AI trusts you enough to recommend you.

The Four Pillars of Entity Health

- Data consistency: NAP (Name, Address, Phone) identical everywhere, and your name matches bar records character-for-character

- Review sentiment: specific, keyword-rich reviews that mention case types and service attributes

- Schema coverage: LegalService and Attorney markup with sameAs linking to all verified profiles

- Topical authority: dense clusters of interlinked content proving expertise in specific practice areas

Weakness in any pillar degrades the whole. Great content but conflicting addresses? Excluded from local results. Great reviews but no schema? Missed by crawlers looking for LegalService entities.

The Metric Shift

The era of “counting clicks” is ending. The era of “measuring influence” has begun.

A “zero-click” is not a failure; it’s a touchpoint. When 65% of searches end without a click (per SparkToro), the definition of success shifts from “site visit” to “brand impression.” You need to track how often you’re cited in AI answers, even if no click results.

The goal isn’t to be found. It’s to be cited.
